The Yelp Big Data Dive: Part 2 — Bringing Data to Life

In the first part of this series, we delved into setting up a robust Big Data ecosystem using Docker Compose. Let’s explore how I brought Yelp’s extensive dataset to life, transforming raw data into actionable insights with Python and Streamlit.

Processing Yelp Data with Jupyter Notebook

The first step was to ingest Yelp’s JSON files into our Hadoop Distributed File System (HDFS), which makes the data accessible for large-scale processing. I did this work in a Jupyter Notebook with PySpark. The upload step itself uses the hdfs Python package, which talks to HDFS over WebHDFS.

Here’s a glimpse of the code I used to upload the JSON files into HDFS:

from hdfs import InsecureClient
import os

# Connect to the NameNode's WebHDFS endpoint as the 'hdfs' user
client = InsecureClient(url='http://namenode:50070', user='hdfs')

hdfs_path = '/user/sebas'  # destination directory in HDFS
local_path = '/home/jovyan/work/yelp_dataset'  # local folder holding the Yelp JSON files

# Upload every JSON file in the local folder to HDFS
for filename in os.listdir(local_path):
    if filename.endswith('.json'):
        local_file = os.path.join(local_path, filename)
        hdfs_file = os.path.join(hdfs_path, filename)
        client.upload(hdfs_file, local_file)

This code connects to HDFS through the NameNode's web endpoint (InsecureClient(url='http://namenode:50070', user='hdfs')). It then uses a for loop to upload every JSON file in my local dataset folder to the /user/sebas directory in HDFS.
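You may want to check which files the loop will send before you touch the cluster. To do that, you can pull the file selection into a small pure-Python function. This is a sketch, not the code from my notebook. plan_uploads is a hypothetical helper name. It joins HDFS paths with posixpath because HDFS paths always use forward slashes, whatever the local OS is.

```python
import posixpath
from pathlib import Path


def plan_uploads(local_dir, hdfs_dir):
    """Map each local .json file to its destination path in HDFS.

    Hypothetical helper: returns a sorted list of (local_file, hdfs_file)
    tuples so the upload order is deterministic.
    """
    plan = []
    for local_file in sorted(Path(local_dir).glob("*.json")):
        # HDFS paths always use forward slashes, so join them with posixpath
        hdfs_file = posixpath.join(hdfs_dir, local_file.name)
        plan.append((str(local_file), hdfs_file))
    return plan
```

In the notebook, you would loop over plan_uploads('/home/jovyan/work/yelp_dataset', '/user/sebas') and call client.upload(hdfs_file, local_file) for each pair, just as the loop above does.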

Next, I created Hive tables so the dashboard could query the data with SQL. Here’s a glimpse of the code I used to create them:

from pyspark.sql import SparkSession

# Initialize a Spark session with Hive support
spark = SparkSession \
    .builder \
    .appName("Hive Table Creation") \
    .config("spark.sql.warehouse.dir", "hdfs://namenode:8020/user/hive/warehouse") \
    .enableHiveSupport() \
    .getOrCreate()

# Read the JSON files from HDFS (Spark infers each schema on read)
user_json = "hdfs://namenode:8020/user/sebas/yelp_academic_dataset_user.json"
df_user = spark.read.json(user_json)

review_json = "hdfs://namenode:8020/user/sebas/yelp_academic_dataset_review.json"
df_review = spark.read.json(review_json)

business_json = "hdfs://namenode:8020/user/sebas/yelp_academic_dataset_business.json"
df_business = spark.read.json(business_json)

# Print the inferred schemas
print("User Schema")
df_user.printSchema()

print("Review Schema")
df_review.printSchema()

print("Business Schema")
df_business.printSchema()

# Register each DataFrame as a temporary view
df_user.createOrReplaceTempView("temp_user_table")
df_review.createOrReplaceTempView("temp_review_table")
df_business.createOrReplaceTempView("temp_business_table")

# Create a Hive table from each temporary view
spark.sql("CREATE TABLE user AS SELECT * FROM temp_user_table")
spark.sql("CREATE TABLE review AS SELECT * FROM temp_review_table")
spark.sql("CREATE TABLE business AS SELECT * FROM temp_business_table")

# Stop the Spark session
spark.stop()

The code above starts a Spark session with Hive support and points its warehouse directory at the Hive warehouse in HDFS, which runs in my Docker containers. It reads the JSON files stored in HDFS into Spark DataFrames, and Spark infers each schema automatically. It then registers each DataFrame as a temporary view and creates a Hive table from each view with CREATE TABLE ... AS SELECT. The dashboard later queries these Hive tables with SQL.
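The three CREATE TABLE statements differ only in their names, so a loop can also generate them. The sketch below is an illustrative refactor, not the notebook's code. hive_ctas_statements is a hypothetical helper, and it makes two additions. IF NOT EXISTS lets you rerun the notebook without failing on tables that already exist. Backticks around table names are a precaution for names like user, which can collide with SQL keywords depending on the Spark and Hive versions.

```python
def hive_ctas_statements(tables):
    """Build one CREATE TABLE ... AS SELECT statement per (hive_table, temp_view) pair.

    Hypothetical helper: backticks keep names such as `user` from being
    parsed as keywords, and IF NOT EXISTS makes re-runs safe.
    """
    return [
        f"CREATE TABLE IF NOT EXISTS `{table}` AS SELECT * FROM {view}"
        for table, view in tables
    ]


# The three Yelp tables, each paired with the temp view that feeds it
YELP_TABLES = [
    ("user", "temp_user_table"),
    ("review", "temp_review_table"),
    ("business", "temp_business_table"),
]

for statement in hive_ctas_statements(YELP_TABLES):
    print(statement)  # in the notebook: spark.sql(statement)
```

In the notebook, you would pass each generated string to spark.sql() after registering the temporary views.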

The rest of the Jupyter Notebook is available in my GitHub repository.

Connecting and Creating the Spark Application

Before diving into the data, I needed to connect the dashboard to our Big Data ecosystem.

I created a data.py file that builds the Spark session and holds the other helper functions the Streamlit dashboard imports.

Here’s a look at the Python function I developed to connect to and create the Spark application:

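import streamlit as st
from pyspark.sql import SparkSession

# Spark Session
def spark_session():
    # Build the Spark session only once per Streamlit session and reuse it on reruns
    if 'spark_initialized' not in st.session_state:
        spark = SparkSession \
            .builder \
            .appName("Streamlit-Data") \
            .master("local") \
            .config("spark.sql.warehouse.dir", "hdfs://namenode:8020/user/hive/warehouse") \
            .enableHiveSupport() \
            .getOrCreate()
        st.session_state['spark_initialized'] = True
        st.session_state['spark'] = spark
    else:
        spark = st.session_state['spark']
    return spark

Streamlit reruns the whole script on every interaction. Keeping the session in st.session_state lets the dashboard reuse one SparkSession instead of rebuilding it each time. Every data operation in the dashboard goes through this function to reach our Dockerized Big Data environment.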
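The session_state check in spark_session() follows a create-once, reuse-later pattern. The sketch below isolates that logic so it can run without Streamlit or Spark. A plain dict stands in for st.session_state, and fake_builder is a hypothetical stand-in for the SparkSession builder chain. The sketch also folds the two keys (spark_initialized and spark) into one, because the presence of the session itself shows that it was initialized.

```python
def get_or_init(state, key, factory):
    """Return state[key], calling factory() only the first time."""
    if key not in state:
        state[key] = factory()
    return state[key]


calls = []


def fake_builder():
    # Hypothetical stand-in for SparkSession.builder...getOrCreate();
    # records each call so we can see how often it runs
    calls.append(1)
    return object()


session_state = {}  # stand-in for st.session_state
first = get_or_init(session_state, "spark", fake_builder)
second = get_or_init(session_state, "spark", fake_builder)  # simulated rerun
print(first is second, len(calls))  # True 1
```

Recent Streamlit versions also offer the st.cache_resource decorator for objects like this. The two differ in scope. st.session_state holds one value per browser session, while st.cache_resource shares one cached object across all sessions of the app.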

The rest of the functions in data.py can be found here.

Crafting the Streamlit Dashboard

With our data now accessible, the next step was to create a user-friendly interface to interact with this data.

The dashboard is divided into two pages. To build a multipage Streamlit app, you need to follow this structure (explained in the Streamlit docs here):

Yelp.py # This is the file you run with "streamlit run"
└─── pages/
    └─── 1_🌍_Business_Insights.py # This is the first page
    └─── 2_📊_Target_Ads.py # This is the second page

As the Streamlit docs show, a multipage app needs an entrypoint file that you launch with streamlit run. The docs call it Home.py, and in my case it is Yelp.py. Here is part of the code for my Yelp.py file, which explains what the dashboard is about:

import streamlit as st

st.set_page_config(

page_title="Yelp Big Data Analysis",

page_icon="yelp_icon.png",

)

st.write("# Deciphering the Voice of Yelp! :face_with_monocle:")

st.sidebar.success("Select any of the pages above!")

st.markdown(

"""

## Elevate Your Restaurant's Visibility with Yelp's Streamlit Dashboard

Welcome to Yelp's exclusive Streamlit web dashboard, a revolutionary tool designed to transform how Yelp approaches digital advertising. In the ever-competitive entrepreneurial world, our dashboard offers Yelp-listed businesses a unique advantage – the ability to make strategic, data-backed decisions for targeted advertising and business analysis.

### Dashboard Overview

Our Streamlit dashboard, tailored using Yelp's extensive business listings, is segmented into two insightful sections:

1. **Business Insights 🌍**: This section is a treasure trove for the Yelp marketing and advertising team seeking to understand what businesses need a better engagement on their Cost-Per-Click plan. It analyzes various business categories in-depth, focusing on review counts and public perception. By comparing high and low review counts across categories and businesses where State and City businesses are located, you can identify market trends, gauge customer interest, and understand where Yelp can target their team to increase user Cost-Per-Click engagement.

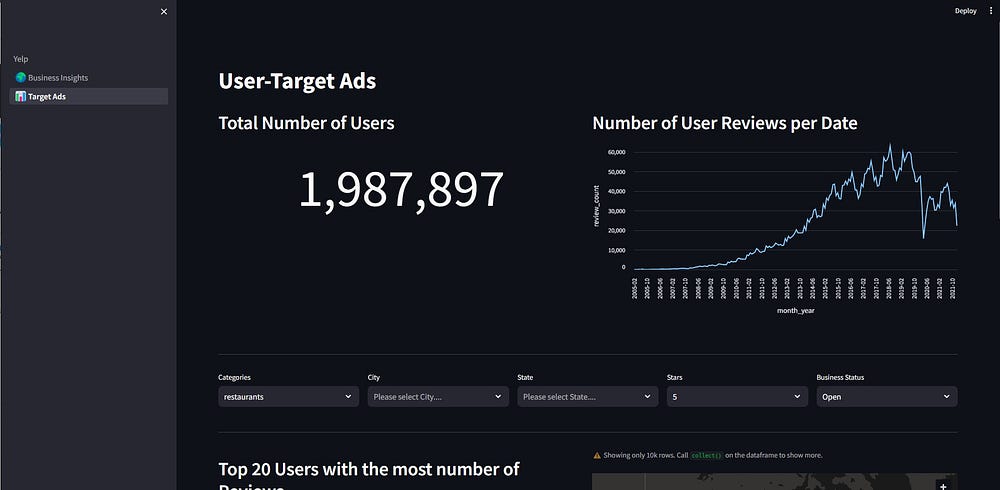

2. **Target Ads 📊**: Leveraging Yelp's rich user reviews and preferences database, the 'Target Ads' section enables Yelp to create highly effective advertising campaigns. By analyzing past review categories, we help Yelp identify potential customers most likely interested in certain categories. This targeted approach ensures that the ads reach the most relevant and responsive audience, increasing the likelihood of engagement and conversion.

"""

)

You can find the code for the other two pages here.
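The page code isn't reproduced in this post. At its core, the Business Insights idea compares review counts across categories, states, and cities, which comes down to an aggregation like the one below. This is a hedged, pure-Python sketch with made-up sample records, not the dashboard's actual query. It assumes the Yelp business JSON layout, where categories is a single comma-separated string. In Spark SQL, the same idea would typically use explode(split(categories, ', ')) followed by a GROUP BY.

```python
from collections import Counter


def review_counts_by_category(businesses, state=None):
    """Sum review_count per category, optionally for a single state."""
    totals = Counter()
    for business in businesses:
        # Optional filter, e.g. state="NV" to compare one state's categories
        if state is not None and business.get("state") != state:
            continue
        # Yelp stores categories as one comma-separated string (or null)
        categories = business.get("categories") or ""
        for category in categories.split(","):
            category = category.strip()
            if category:
                totals[category] += business.get("review_count", 0)
    return totals


# Made-up sample records for illustration only
sample = [
    {"name": "A", "city": "Tampa", "state": "FL", "categories": "Restaurants, Pizza", "review_count": 120},
    {"name": "B", "city": "Tampa", "state": "FL", "categories": "Restaurants, Mexican", "review_count": 45},
    {"name": "C", "city": "Reno", "state": "NV", "categories": "Nail Salons", "review_count": 8},
    {"name": "D", "city": "Reno", "state": "NV", "categories": None, "review_count": 3},
]

print(review_counts_by_category(sample).most_common(3))
# [('Restaurants', 165), ('Pizza', 120), ('Mexican', 45)]
```

Categories near the bottom of such a ranking, within a given state or city, are the kind of low-engagement segments the Business Insights page is meant to surface.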

Targeting Ads: A Data-Driven Approach

With the insights gathered from our dashboard, we can create more effective ad campaigns by:

- Targeting Popular Categories: By identifying popular categories, Yelp can target ads towards businesses in these categories, increasing the likelihood of ad clicks and conversions.

- Personalized Ad Campaigns: Understanding user preferences allows for personalized ad campaigns, which are more likely to resonate with the target audience, leading to higher engagement.

- Optimized Ad Placement: Analyzing user interaction patterns helps place ads where they are most likely to be clicked.
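To make the personalized targeting idea more concrete, here is a minimal sketch of one way to rank users by their interest in a category. It counts how many of each user's past reviews were for businesses in that category. The sketch is illustrative only. top_users_for_category is a hypothetical helper, the sample data is invented, and it assumes review and business records are linked by business_id, as they are in the Yelp JSON files. At Yelp scale, the same join and count would be done in Spark over the review and business Hive tables.

```python
from collections import Counter


def top_users_for_category(reviews, business_categories, target, limit=3):
    """Rank users by how many of their past reviews fall in `target`.

    reviews: iterable of dicts with user_id and business_id.
    business_categories: dict mapping business_id to a comma-separated
    categories string (the Yelp business JSON layout).
    """
    counts = Counter()
    for review in reviews:
        categories = business_categories.get(review["business_id"]) or ""
        # Count the review only if the business is in the target category
        if target in (c.strip() for c in categories.split(",")):
            counts[review["user_id"]] += 1
    return [user for user, _ in counts.most_common(limit)]


# Made-up sample data for illustration only
business_categories = {"b1": "Restaurants, Pizza", "b2": "Nail Salons", "b3": "Pizza, Italian"}
reviews = [
    {"user_id": "u1", "business_id": "b1"},
    {"user_id": "u1", "business_id": "b3"},
    {"user_id": "u2", "business_id": "b3"},
    {"user_id": "u3", "business_id": "b2"},
]

print(top_users_for_category(reviews, business_categories, "Pizza"))  # ['u1', 'u2']
```

Counting reviews is the simplest possible interest signal. A real campaign might also weight recent reviews or star ratings, but that goes beyond what this sketch shows.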

Impact: A Game-Changer for Yelp’s Ad Strategy

This dashboard isn’t just about pretty graphs and data points; it’s a tool that can change how Yelp approaches advertising. By homing in on the most relevant business categories and understanding user behavior, Yelp can target ads more effectively, potentially increasing click-through rates and CPC revenue.

Conclusion: Data Science in Action

What began as a complex dataset has transformed into a strategic asset for Yelp, thanks to the power of Big Data technologies and some Python magic!

To see the complete project code, check out the project repository on my GitHub here!

Let me know what you think of this in the comments!